Explain data analysis

Data analysis is the process of evaluating data using analytical and logical reasoning to examine each component of the data provided. Applied to big data, it is used to uncover hidden patterns, unknown correlations, market trends, customer preferences and other useful information that can help organizations make more-informed business decisions.

Three factors -- volume, velocity and variety -- became known as the 3Vs of big data, a concept Gartner popularized after acquiring Meta Group and hiring Doug Laney. Separately, the Hadoop distributed processing framework was launched as an Apache open source project in 2006, planting the seeds for a clustered platform built on top of commodity hardware and geared to run big data applications.

In the analysis step, data is made meaningful with the help of statistical tools, which ultimately make the data self-explanatory. According to Wilkinson and Bhandarkar, analysis of data ‘involves a large number of operations that are very closely related to each other and these operations are carried out with the aim of summarizing the data that has been collected and then organizing this summarized data in a way that helps in getting the answers to the various questions or may suggest hypothesis.’

This form of analysis is just one of the many steps that must be completed when conducting a research experiment. Its main purpose is to look at what the data is trying to tell us.

In ensuing years, though, big data analytics has increasingly been embraced by retailers, financial services firms, insurers, healthcare organizations, manufacturers, energy companies and other mainstream enterprises. Unstructured and semi-structured data types typically don't fit well in traditional data warehouses that are based on relational databases oriented to structured data sets. When approaching investment in the stock market, two very common methodologies are used: fundamental analysis and technical analysis.

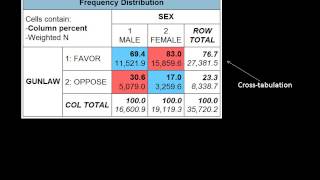

It is especially important to exactly determine the structure of the sample (and specifically the size of the subgroups) when subgroup analyses will be performed during the main analysis. The characteristics of the data sample can be assessed by looking at basic statistics of important variables, correlations and associations, and cross-tabulations.[31]
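Checking subgroup sizes and a cross-tabulation before the main analysis can be sketched in a few lines of Python. The records, the field names `age_group` and `region`, and the helper `small_subgroups` with its `min_size` threshold are all hypothetical; this is an illustration under those assumptions, not a prescribed procedure.

```python
from collections import Counter

# Hypothetical survey records; the field names are illustrative only.
records = [
    {"age_group": "18-29", "region": "north"},
    {"age_group": "18-29", "region": "south"},
    {"age_group": "30-49", "region": "north"},
    {"age_group": "30-49", "region": "north"},
    {"age_group": "50+", "region": "south"},
]


def subgroup_sizes(rows, field):
    """Count how many records fall into each value of `field`."""
    return Counter(row[field] for row in rows)


def cross_tab(rows, row_field, col_field):
    """Count records for every (row_field, col_field) combination."""
    return Counter((row[row_field], row[col_field]) for row in rows)


def small_subgroups(rows, field, min_size):
    """List subgroup values with fewer than `min_size` records (hypothetical threshold)."""
    return sorted(value for value, n in subgroup_sizes(rows, field).items() if n < min_size)


if __name__ == "__main__":
    print(subgroup_sizes(records, "age_group"))
    print(cross_tab(records, "age_group", "region"))
    print(small_subgroups(records, "age_group", min_size=2))
```

Flagging small subgroups early matters because estimates computed on a handful of cases in a subgroup analysis can be unstable.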

For instance, will the data show that there are more young hunters out hunting deer each year?

In other cases, the collection process may consist of pulling a relevant subset out of a stream of raw data that flows into, say, Hadoop and moving it to a separate partition in the system so it can be analyzed without affecting the overall data set. Once the data that's needed is in place, the next step is to find and fix data quality problems that could affect the accuracy of analytics applications.
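A minimal sketch of pulling a relevant subset out of a raw stream and then flagging records with quality problems, assuming a hypothetical in-memory list of event dictionaries in place of a real Hadoop feed. The field names and the validation rules in `quality_problems` are illustrative assumptions, not rules from any particular system.

```python
from typing import Iterable, Iterator

# Hypothetical raw events standing in for data flowing into a cluster.
raw_events = [
    {"source": "web", "user_id": "u1", "amount": 19.99},
    {"source": "sensor", "device": "d7", "temp_c": 21.5},
    {"source": "web", "user_id": None, "amount": 5.00},
    {"source": "web", "user_id": "u2", "amount": -3.00},
]


def relevant_subset(events: Iterable[dict], source: str) -> Iterator[dict]:
    """Lazily pull only the events from one source out of the raw stream."""
    return (event for event in events if event.get("source") == source)


def quality_problems(record: dict) -> list[str]:
    """Return data-quality issues found in one web record (illustrative rules)."""
    problems = []
    if not record.get("user_id"):
        problems.append("missing user_id")
    amount = record.get("amount")
    if amount is None or amount < 0:
        problems.append("invalid amount")
    return problems


def split_by_quality(records: Iterable[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Separate clean records from flagged ones so problems can be fixed before analysis."""
    clean, flagged = [], []
    for record in records:
        issues = quality_problems(record)
        if issues:
            flagged.append((record, issues))
        else:
            clean.append(record)
    return clean, flagged


if __name__ == "__main__":
    clean, flagged = split_by_quality(relevant_subset(raw_events, "web"))
    print(f"{len(clean)} clean record(s)")
    for record, issues in flagged:
        print(record, issues)
```

Because `relevant_subset` is a generator, the raw stream is filtered lazily and the original list is left untouched, loosely mirroring the idea of analyzing a separate copy without affecting the overall data set.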

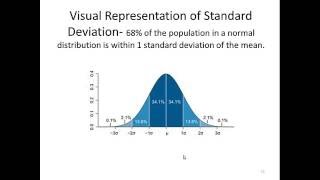

Descriptive statistics such as the average or median may be generated to help understand the data. Hypothesis testing involves considering the likelihood of Type I and Type II errors, which relate to whether the data supports accepting or rejecting the hypothesis. Regression analysis may be used when the analyst is trying to determine the extent to which independent variable X affects dependent variable Y.

The analytics toolkit includes tools for data mining, which sift through data sets in search of patterns and relationships; predictive analytics, which build models for forecasting customer behavior and other future developments; machine learning, which taps algorithms to analyze large data sets; and deep learning, a more advanced offshoot of machine learning. Text mining and statistical analysis software can also play a role in the big data analytics process, as can mainstream BI software and data visualization tools. When determining how to communicate the results, the analyst may consider data visualization techniques to help clearly and efficiently communicate the message to the audience.

Common data-cleaning tasks include record matching, identifying inaccurate data, assessing the overall quality of existing data,[5] deduplication, and column segmentation. Persons communicating the data may also be attempting to mislead or misinform, deliberately using bad numerical techniques.
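Deduplication often starts with record matching on normalized fields. This sketch assumes hypothetical customer records and treats two records as duplicates only when their lowercased, whitespace-normalized name and email agree exactly; real record matching frequently uses fuzzier comparisons than this.

```python
import re

# Hypothetical customer records containing a near-duplicate entry.
customers = [
    {"name": "Ada Lovelace", "email": "Ada@Example.com "},
    {"name": "ada  lovelace", "email": "ada@example.com"},
    {"name": "Alan Turing", "email": "alan@example.org"},
]


def match_key(record):
    """Normalize case and whitespace so trivially different records compare equal."""
    name = re.sub(r"\s+", " ", record["name"]).strip().lower()
    email = record["email"].strip().lower()
    return name, email


def deduplicate(records):
    """Keep the first record for each match key, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        key = match_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


if __name__ == "__main__":
    for record in deduplicate(customers):
        print(record["name"])
```

Keeping the first occurrence is a simple policy choice; an analyst might instead merge fields from all matched records.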

Driven by specialized analytics systems and software, big data analytics can point the way to various business benefits, including new revenue opportunities, more effective marketing, better customer service, improved operational efficiency and competitive advantages. Big data analytics applications enable data scientists, predictive modelers, statisticians and other analytics professionals to analyze growing volumes of structured transaction data, plus other forms of data that are often left untapped by conventional business intelligence (BI) and analytics programs. That encompasses a mix of semi-structured and unstructured data -- for example, internet clickstream data, web server logs, social media content, text from customer emails and survey responses, mobile-phone call-detail records and machine data captured by sensors connected to the internet of things. On a broad scale, data analytics technologies and techniques provide a means of analyzing data sets and drawing conclusions about them to help organizations make informed business decisions.

In addition to data scientists and other data analysts, analytics teams often include data engineers, whose job is to help get data sets ready for analysis. The analytics process starts with data collection, in which data scientists identify the information they need for a particular analytics application and then work on their own or with data engineers and IT staffers to assemble it for use.

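Two common analytical tasks are sorting and determining a range. Sorting ranks a set of data cases, such as a list of cereals, according to some ordinal metric. Determining a range means, given a set of data cases and an attribute of interest, finding the span of values within the set: what is the range of values of attribute A in a set S of data cases? Data analytics is a subset of business intelligence, which is a set of technologies and processes that use data to understand and analyze business performance.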
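Sorting and range determination map directly onto built-in Python operations. The cereal records and the `sugar_g` attribute below are hypothetical stand-ins for a real data set, and `rank_by` and `value_range` are illustrative helper names.

```python
# Hypothetical data cases; "sugar_g" is an illustrative attribute, not real product data.
cereals = [
    {"name": "Cereal A", "sugar_g": 12},
    {"name": "Cereal B", "sugar_g": 3},
    {"name": "Cereal C", "sugar_g": 7},
]


def rank_by(cases, attribute, descending=False):
    """Sort: order data cases by an ordinal attribute."""
    return sorted(cases, key=lambda case: case[attribute], reverse=descending)


def value_range(cases, attribute):
    """Determine range: return the (minimum, maximum) of an attribute over the cases."""
    values = [case[attribute] for case in cases]
    return min(values), max(values)


if __name__ == "__main__":
    for case in rank_by(cereals, "sugar_g"):
        print(case["name"], case["sugar_g"])
    low, high = value_range(cereals, "sugar_g")
    print(f"range: {low} to {high} (span {high - low})")
```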

The process of exploration may result in additional data cleaning or additional requests for data, so these activities may be iterative in nature. Facts by definition are irrefutable, meaning that any person involved in the analysis should be able to agree upon them.

Further analyses might be appropriate to discover the dimensionality of the data set or identify new meaningful underlying variables.

Whether statistical or non-statistical methods of analysis are used, researchers should be aware of the potential for compromising data integrity. This can allow investigators to better supervise staff who conduct the data analysis process and make informed decisions. Methods of data collection and analysis may differ by scientific discipline, but the optimal stage for determining appropriate analytic procedures occurs early in the research process and should not be an afterthought.